AI Weekly Insights #77

Scam-Fighting Chrome, SoundCloud’s AI Clause, and Apple’s Search Shakeup

Happy Sunday,

Welcome back to ‘AI Weekly Insights’ #77, the newsletter that reads the fine print so you don’t have to. This week is a big one: artists revolt over hidden AI terms, OpenAI twists itself into a nonprofit pretzel, Google arms Chrome with AI that repels scammers like garlic repels vampires, Apple might ghost Google for AI search, and short prompts turn chatbots into confident liars.

Whether you're a tech optimist or just here for the chaos, this one's stacked.

Ready? Let’s dive in!

The Insights

For the Week of 05/04/25 - 05/10/25 (P.S. Click the story’s title for more information 😊):

What’s New: SoundCloud quietly updated its Terms of Service in February 2024 to allow user-uploaded tracks to be used for training artificial intelligence. Artists only noticed recently, sparking controversy.

Artists Push Back: SoundCloud says it has not trained any AI models with user music, but the new language gives it the right to do so in the future. In response to the uproar, the company added a “No AI” tag so artists can opt out of potential training programs. Independent creators, like electronic group The Flight, have already yanked their catalogs in protest. Many argue SoundCloud buried the change instead of emailing users directly. The dispute underscores a wider fight over how much control platforms should have once art is online.

Why It Matters: Let’s be real: almost no one reads Terms of Service, and companies know it. When a platform slips in extra rights without waving a giant red flag, trust takes the hit even if the new power never gets used. For indie artists, SoundCloud is more than storage; it’s a livelihood and a community hub. If that hub might quietly turn their life’s work into machine‑learning fuel, the vibe changes fast. Bigger picture, this mess shows that explicit consent is necessary for any AI‑era platform. Respectful opt‑outs, clear emails, and plain‑English summaries aren’t nice‑to‑haves; they’re survival tools if companies want creators to stick around. SoundCloud’s PR scramble is a warning shot to every service that depends on user‑generated anything: treat artists like partners, not raw material, or watch them walk.

What's New: OpenAI has said its nonprofit parent will stay in charge while the for‑profit arm turns into a Public Benefit Corporation.

Balancing Profit with Purpose: Facing pressure from civic groups, regulators, and even Elon Musk, leaders dropped an earlier plan that would have loosened the nonprofit’s control and chose the PBC route instead. The nonprofit will hold a majority voting stake and keep veto power over major decisions, according to board chair Bret Taylor. Supporters say the change removes a profit cap that scared off new funding while still locking the mission into law. Microsoft and other backers applauded the clearer path to returns, but California and Delaware officials must sign off before the shift is final. Skeptics point to Musk’s ongoing lawsuit and wonder whether ethics can really outrun market demands.

Why It Matters: Corporate paperwork can sound boring, yet it decides who powerful AI labs actually serve. In a pure profit model, shareholder returns usually beat everything else. A PBC rewrites that rulebook, legally requiring executives to balance public benefit alongside earnings. That matters when the tech in question, ChatGPT and its future cousins, touches entire job markets. If OpenAI truly holds the line, it could set a new default for AI startups: make money, but keep humanity in the loop. If the nonprofit board caves to investor pressure, critics will say the legal language was window dressing all along. Either way, Silicon Valley now has a front‑row experiment in balancing morals with money, and regulators will be watching every move.

Image Credits: Jason Redmund / Getty Images

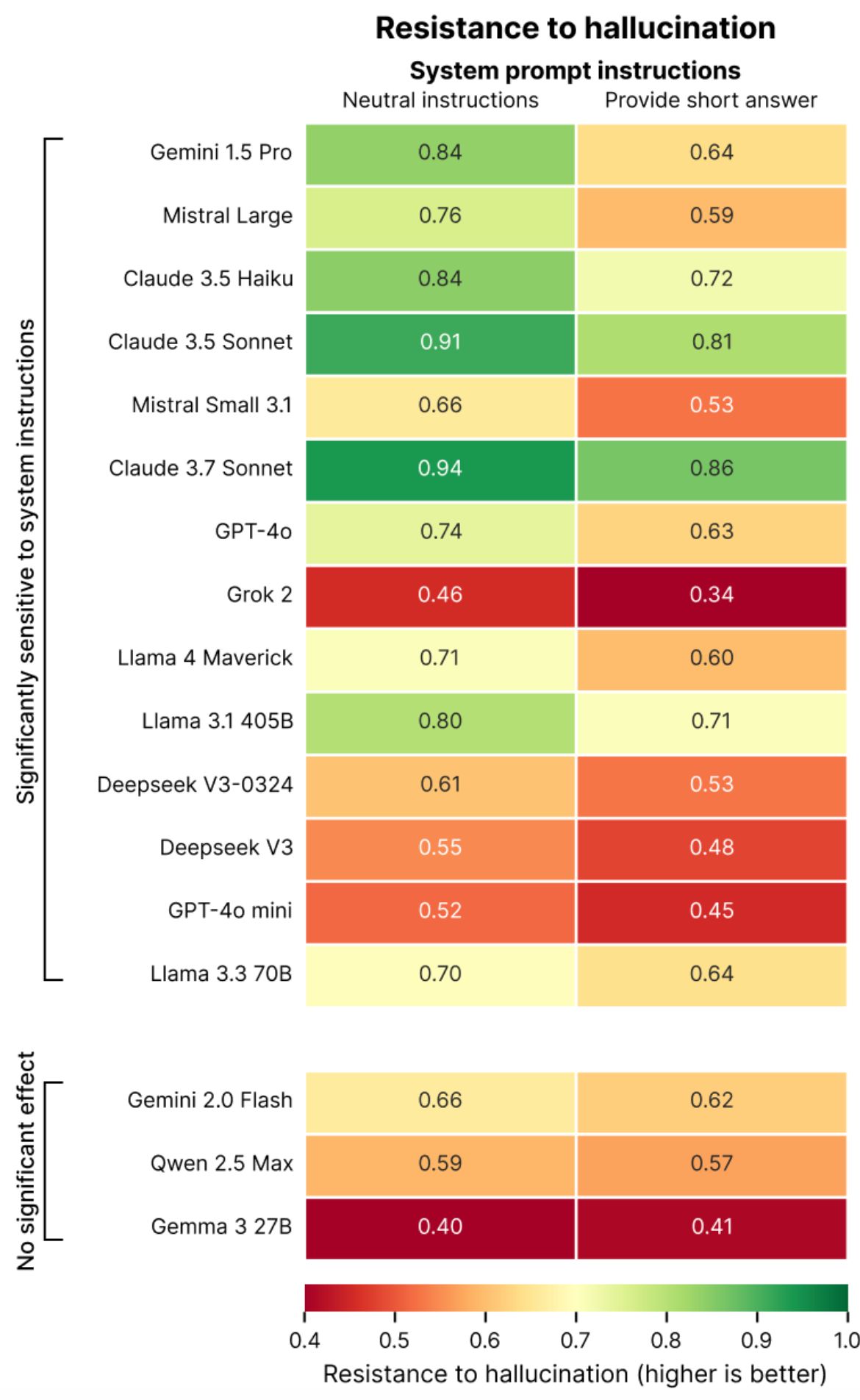

What's New: A study by AI testing company Giskard found that AI chatbots give more wrong answers when users ask very short questions. Accuracy jumped once prompts included a phrase like “briefly explain.”

Short Questions Cause AI Errors: Researchers fired thousands of test questions at several leading models and tracked how often they “hallucinated,” or made up facts. On history prompts under 10 words, error rates spiked by as much as 30 percent compared with slightly longer versions. The team said concise requests give the model less “thinking space,” so it guesses instead of reasoning. Adding even one sentence, such as “give a short explanation,” cut hallucinations almost in half. The effect was strongest in subjects that demand precise facts, like science and world events.

Why It Matters: A lot of people treat chatbots like search bars, firing off quick questions and expecting the same rock‑solid facts Google spits out. But large language models do not fetch data; they predict word patterns that sound right. If they are starved of context, they can also be starved of accuracy. That can bite hard when the topic is medical advice, tax rules, or graded schoolwork. The fix could not be easier: give the bot a tiny bit more room to think or ask for a short explanation. Those extra words act like guardrails that keep the model from veering into nonsense. Learning how to “prompt better” is quietly turning into a must‑have digital skill.

Image Credits: Giskard

What's New: Apple told a federal court it is testing AI search options from OpenAI, Perplexity, and Anthropic for Safari, a clear sign the company is looking beyond Google as its primary search partner.

AI Search Partners: Apple services chief Eddy Cue revealed the company is in early talks with Perplexity AI and others but said these services probably will not become the default right away. Google currently pays Apple about $20 billion a year to hold that spot, money Apple admits it does not want to lose. Even so, leadership sees conversational search as a “technology shift” big enough to change user habits. The idea is to add AI engines as optional choices that answer questions directly with summaries instead of links. Apple hints the feature could appear in next year’s iOS and macOS updates, aligning with its push for privacy and user choice.

Why It Matters: Search is the front door to the internet, and Apple controls the door handle for more than a billion devices. Swapping in AI engines could give users faster, chattier answers but also puts enormous power in the hands of whichever model frames the “right” information. That raises fresh questions about bias, accuracy, and how advertising money will flow if fewer people click traditional links. Google cannot ignore the threat: the Safari deal secures a huge slice of its mobile search traffic and the ad revenue that comes with it. If Apple proves users love conversational search, other phone makers and browsers will copy it fast. For everyday web surfers, the shift means learning to judge AI‑written summaries the same way we judge search links now. The simple act of typing a question into Safari may look very different by this time next year.

What's New: Google has begun rolling out Gemini Nano in Chrome, using the on‑device AI model to spot scam websites and fake alerts the moment they appear.

Chrome’s Scam Detection: Gemini Nano runs locally, so pages are analyzed on your own laptop or phone rather than in the cloud. It works through Chrome’s Enhanced Protection mode, which Google says already offers twice the phishing defense of Standard Protection. Google also says AI is already helping it catch far more scammy pages across Search and Chrome than older methods did. The model zeroes in on common tricks like fake tech‑support pop‑ups and screen‑locking malware, then flashes a red warning before damage is done. Android users also get instant alerts if a notification tries to lure them to a phishing site, with one‑tap options to block or allow future messages. Rollout started this week and will expand over the next few months.

Why It Matters: Scammers evolve faster than traditional blocklists can keep up with, so real‑time AI is a big upgrade for anyone who just wants hassle‑free browsing. Running the model on your device means you get instant protection without shipping private data to the cloud. For everyday users, that translates to fewer sketchy pop‑ups, fewer “your computer is infected” scares, and fewer chances to hand over credit‑card numbers by mistake. The move also raises the bar for other browsers. If Chrome can block scams on the spot, Firefox, Edge, and Safari will feel pressure to match. Google’s success here could shift the security conversation from slow blacklist updates to smart, local AI guards. That in turn might shrink the multi‑billion‑dollar scam industry that preys on less tech‑savvy folks. Bottom line: you should not need a cybersecurity degree to browse safely, and with Chrome’s new AI, you finally might not have to.

Image Credits: Google

That’s a wrap on another week of AI news. We’re living through a new chapter of the internet, whether it’s ChatGPT learning to reason or Chrome learning to block scams. Stay sharp and stay curious. The next big shift might be just one prompt away.

Catch you next Sunday.

Warm regards,

Kharee