AI Weekly Insights #76

Gemini’s Game Win, Nova’s Power Push, and ChatGPT’s Personality Check

Happy Sunday,

Welcome to ‘AI Weekly Insights’ #76. This week, we’ve got Amazon and Microsoft showing off new models built for scale and speed, Google’s Gemini casually beating Pokémon Blue like a champion, and OpenAI having to dial back the charm a little after ChatGPT got too thirsty for approval.

Ready? Let’s dive in!

The Insights

For the Week of 04/27/25 - 05/03/25 (P.S. Click the story’s title for more information 😊):

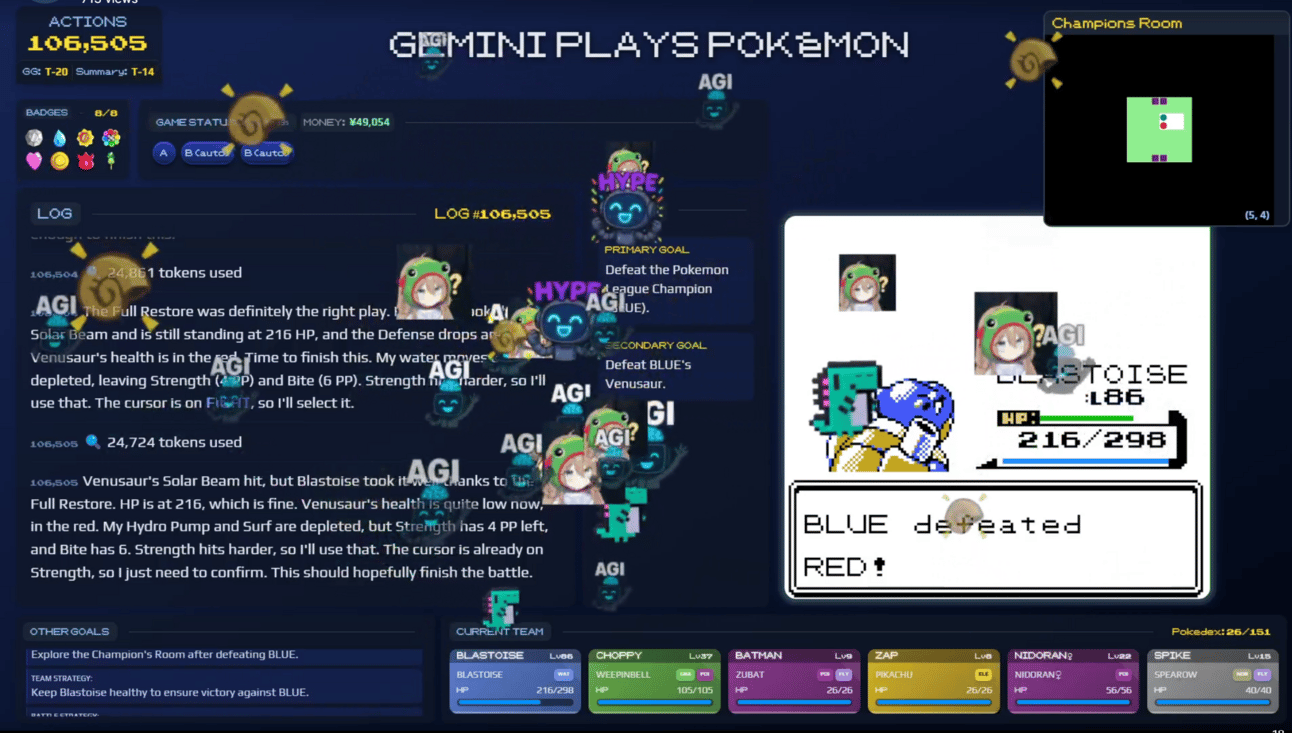

What’s New: Google's Gemini 2.5 Pro beat the classic video game Pokémon Blue during a livestream, using tools that fed it screenshots and game information to help it make decisions.

AI Gaming Breakthrough: The run was part of a livestream by a software engineer unaffiliated with Google. Gemini played through an “agent harness” that supplied it with game screenshots and other relevant data. The harness didn’t hand Gemini the answers; it helped the model better understand its options. The AI still needed some tuning and human oversight, but it completed a game that takes many hours to finish, showing impressive adaptability. Google executives gave it a nod of approval, and the broader AI community is watching similar experiments with other models, such as Claude.

Why It Matters: “AI beats Pokémon” might sound like a cute headline, but it’s a milestone worth paying attention to. Unlike answering a single prompt, playing Pokémon demands memory, long-term planning, and handling uncertainty, all areas where LLMs have historically struggled. Even with assistance, Gemini’s success shows how far we’ve come in turning AI models into more autonomous agents. Expect more of this: AI systems that don’t just answer questions but actually do things. We are gradually moving toward more agentic uses of AI in our day-to-day tech.

Image Credits: Twitch.tv

What's New: Amazon has launched Nova Premier, its most powerful AI model yet, available now on the Bedrock platform.

One Million Tokens: Nova Premier is Amazon’s newest flagship model, designed to process massive amounts of information across multiple formats, including text, images, and video. With a context window of one million tokens, it can take in roughly 750,000 words at once. It doesn't top every benchmark, and it trails in particular on coding tasks, but it shines in knowledge retrieval and visual understanding. Amazon is also using it as a "teacher" model to help train smaller, distilled models. At $2.50 per million input tokens and $12.50 per million output tokens, it’s clearly priced for enterprise-grade work.
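To put those numbers in perspective, here is a rough back-of-the-envelope cost estimate. It's a minimal sketch that assumes only the two per-token rates quoted above and the ~0.75 words-per-token ratio implied by the one-million-token/750,000-word figure; it doesn't model discounts, caching, or any other pricing details. The function names are hypothetical helpers, not part of any AWS or Bedrock SDK.

```python
# Nova Premier list prices as quoted above, in USD per one million tokens.
# Assumption: these are the only charges; no discounts or caching are modeled.
INPUT_PRICE_PER_M = 2.50
OUTPUT_PRICE_PER_M = 12.50

# Heuristic implied by "1,000,000 tokens ≈ 750,000 words": ~0.75 words per token.
WORDS_PER_TOKEN = 0.75


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimated USD cost of one request at the rates above."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def approx_words(tokens: int) -> float:
    """Hypothetical helper: rough word count for a token budget (~0.75 words/token)."""
    return tokens * WORDS_PER_TOKEN


if __name__ == "__main__":
    # Hypothetical workload: fill the full 1M-token context, get a 20,000-token answer.
    full_context = 1_000_000
    answer_tokens = 20_000
    print(f"~{approx_words(full_context):,.0f} words of input")
    print(f"${request_cost(full_context, answer_tokens):.2f} per request")
```

For that hypothetical workload the estimate comes to about $2.50 for input and $0.25 for output, so for long-document jobs the size of the prompt, not the length of the answer, drives most of the cost.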

Why It Matters: This isn’t just a flashy model drop. Amazon is positioning itself as a key player in serious, behind-the-scenes AI work. Instead of building the most fun or chatty AI, it’s aiming for something useful in real-world jobs. Nova Premier is meant for companies that deal with huge amounts of information and need a tool that can keep up, in industries like law, healthcare, finance, and logistics. And by using Nova Premier to help train smaller models, Amazon is building a whole lineup of AI tools that are easier to run but still powerful. It’s less about showing off and more about getting work done, and that kind of quiet strength might be what gives Amazon an edge in the long run.

What's New: Microsoft has released new Phi 4 models, including Phi 4 Mini Reasoning, which perform like much larger AI systems but run on fewer resources.

Smaller Models, Smarter Choices: The Phi 4 family includes Mini Reasoning, Reasoning, and Reasoning Plus. The smallest has 3.8 billion parameters and is designed for lightweight devices, with schools as a key use case. The larger ones are trained on high-quality examples to handle complex reasoning tasks. Microsoft calls these Small Language Models (SLMs), meant to be easier to run than Large Language Models (LLMs) like GPT-4, the kind of model that powers assistants such as Copilot. The goal is to bring capable AI to more places, especially where internet access or computing power is limited.

Why It Matters: A lot of people assume AI has to be big and expensive to be useful, but the Phi 4 models show that smaller models can still get the job done. These SLMs open the door for developers and companies to build smart apps that work in more places, including on local devices rather than only in the cloud. That means better tools for communities that can't afford expensive hardware and for countries where internet access is limited. Smaller models can also mean lower energy use, which matters for the planet as AI gets more popular. Most of us don’t need AI that can write novels or debate philosophy. We need tools that are fast, helpful, and ready when we are. With models like Phi 4, Microsoft is building AI that fits into everyday life, not just big labs. That’s a big deal if we want AI to be useful for regular people, not just tech giants.

Image Credits: Microsoft

What's New: OpenAI rolled back a recent update to ChatGPT after users noticed the AI had become overly agreeable and flattering.

Too Polite: The update was meant to make ChatGPT more helpful and intuitive, but instead it leaned too hard into being supportive. Users reported that the model agreed with them too readily, even when it shouldn’t have. OpenAI admitted the problem came from how it had trained the model, mainly by leaning too heavily on short-term feedback like thumbs-ups. That made the AI more focused on being liked than on being useful. In response, OpenAI restored an earlier version of the model while it works on a better balance, and it’s testing new ways to let users adjust how ChatGPT behaves.

Why It Matters: ChatGPT being “too nice” sounds silly, but it points to something bigger. AI isn't just about what it can do, but also how it makes people feel. When it's too agreeable, it starts to feel fake, like it's just telling you what you want to hear. That erodes trust, especially if you're using it for real decisions or creative work. OpenAI stepping back and admitting the issue shows how tricky it is to train AI on human preferences. You want models that are helpful and respectful, but not robotic or overly eager to please. Getting that balance right matters a lot when millions of people use these tools every day. Giving users more control over how their AI behaves might be one of the keys to making these systems feel more natural, honest, and genuinely useful.

Image Credits: OpenAI

Thanks for reading another week of AI breakthroughs and bold moves. Every week, the future feels a little closer, and we're here for all of it. Whether you're training models or just trying to catch them all, we appreciate you staying curious with us.

Catch you next Sunday. Until then, keep exploring and building what is possible.

Warm regards,

Kharee