AI Weekly Insights #75

AI Art, Memory Machines, and Lifelike Avatars

Happy Sunday,

This week marks ‘AI Weekly Insights’ #75, and it is a good one. We are digging into some of the biggest shifts happening across the AI world right now: powerful new tools for creators, smarter ways to search your own computer, and chatbots that do not just talk but actually look human.

Ready? Let’s dive in!

The Insights

For the Week of 04/20/25 - 04/26/25 (P.S. Click the story’s title for more information 😊):

What’s New: OpenAI has made its upgraded image generator, gpt-image-1, available to developers through its API.

Creative AI Explosion: The model first took off inside ChatGPT, where people used it to make everything from Ghibli-style art to action-figure photos. In just one week, users made over 700 million images. Now developers can build it into their own apps, websites, and services. They can adjust the style of the images, set rules for what can be generated, and control how fast and how detailed the results are. Pricing depends on the speed and quality settings you choose. Big companies like Adobe, Canva, Figma, and Instacart are already testing it. OpenAI also says every image is tagged with embedded metadata so that compatible tools can identify it as AI-generated.

Why It Matters: This move could change how a lot of creative work gets done online. Whether you are designing online stores, apps, ads, or art, it is now much easier to bring AI images into your workflow. But there are real concerns too. How do we keep track of what’s real when AI images are everywhere? What happens to human artists trying to stand out? OpenAI added some tools to help with safety, but it will be up to developers and companies to use them responsibly. As more platforms adopt AI art, trust and transparency will be bigger challenges than ever. This feels like a huge leap forward for creativity, but it is also a reminder that every big leap comes with big questions.

Image Credits: OpenAI

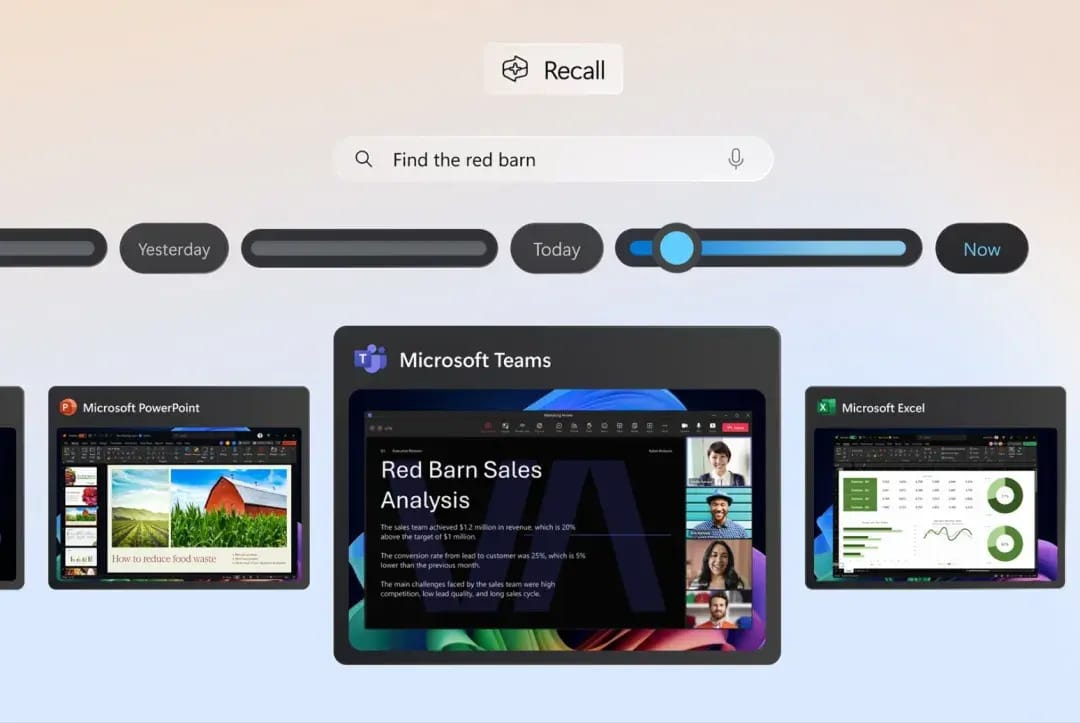

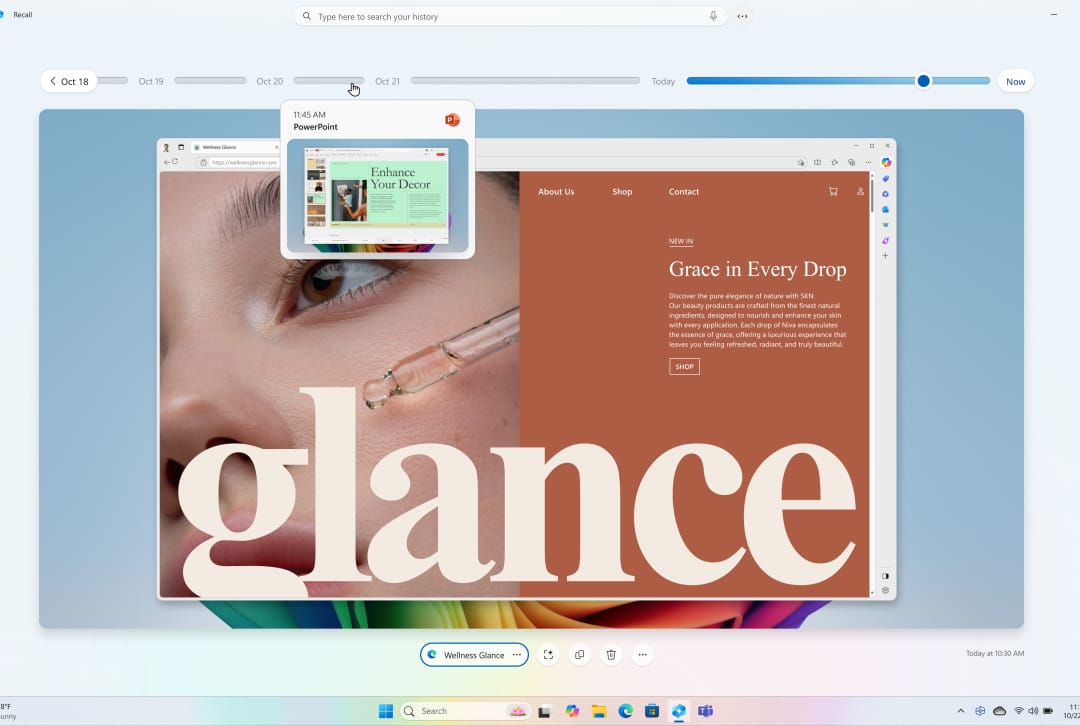

What's New: Microsoft has dropped two big AI features for Copilot Plus PCs: Recall, a screenshot memory tool, and a new AI-powered Windows search that uses natural language to find files faster.

Smarter PCs, Smarter Searches: Recall periodically takes snapshots of your screen, capturing things like the websites you visit or the documents you open. Later, you can search through that activity using everyday language instead of digging through folders. The feature was delayed at first because of privacy worries, but it is now opt-in, with stronger protections like encryption. Alongside Recall, Microsoft’s upgraded Windows search lets you type things like "show me the spreadsheet from last Thursday" and find exactly what you need. There is also a new Click to Do tool, similar to Google's Circle to Search, that lets you act on text or images by clicking directly on them. These updates are launching first on Copilot Plus PCs and will roll out more widely over time.

Why It Matters: Microsoft is making a real push to turn your computer into a true AI assistant. Being able to "search your memory" or ask your PC for something in plain language could save a ton of time and frustration. But it also raises serious privacy questions. Even with encryption, storing detailed records of everything you do all day feels risky if companies do not handle that data carefully. As AI gets more personal, the balance between convenience and privacy is going to get harder to manage. Done right, features like Recall could make tech feel more human and helpful. Done wrong, they could make people even more suspicious about how their data is being used. This is a glimpse of where personal computing is heading: faster, smarter, and far more tied to the choices we make about trust.

Image Credits: Microsoft

What's New: Character.AI has introduced AvatarFX, a new AI model that brings animated video characters to life. The tool is currently in closed beta.

AI-Powered Avatars: AvatarFX can turn either AI-generated art or uploaded pictures into moving, speaking avatars. Users can choose between human-like characters and cartoon-style animations, giving flexibility for different types of conversations. Unlike general-purpose video tools such as OpenAI's Sora, AvatarFX is focused specifically on making chatbots feel more lifelike. Character.AI says every video will be watermarked and that facial features will be blurred to reduce deepfake risks, but these safety features have not been fully stress-tested yet. The company sees this as a way to make AI companions, tutors, and customer service bots feel more natural and engaging.

Why It Matters: AvatarFX is a glimpse into a future where talking to AI looks and feels more natural. Imagine learning from a personalized AI teacher who smiles and nods, or getting help from a support agent that actually feels like a real person. It is easy to see how this could make interactions smoother and more enjoyable. But when AI starts to wear a human face, the emotional stakes change. It gets easier to forget that there is no real person on the other side. Character.AI already knows how quickly users form bonds with text-based bots. Adding humanlike video could make those connections stronger, but also a lot messier. There is a real risk of people trusting or depending on AI in ways they never would with a faceless chatbot. The tech is exciting, no doubt. But the real challenge will be making sure we are building tools that help people, not ones that quietly blur the line between connection and illusion.

What's New: An Australian radio station revealed it had been using an AI-generated DJ for months without listeners realizing. The AI, called Thy, hosted a four-hour daily segment on Sydney’s CADA station.

AI on the Airwaves: The station used AI voice technology from ElevenLabs to clone the voice of a real employee. "Workdays with Thy" played music, shared commentary, and kept up the usual radio chatter without listeners suspecting anything unusual. Neither the station's website nor the broadcast itself disclosed that Thy was an AI, sparking concerns about transparency. The segment reportedly reached over 72,000 listeners daily before the reveal. Industry voices, including leaders from Australia’s media sector, criticized the station for blurring the line between human hosts and AI without public consent.

Why It Matters: This story feels like a warning sign. If an AI can smoothly replace a radio host without anyone noticing, it raises real questions about trust and authenticity. Radio has always been about human connection: the feeling that someone real is talking to you and reacting to the world. When that human element disappears without disclosure, it chips away at that bond. There is also the bigger issue of job security. If AI voices are cheaper and faster, what happens to all the real DJs, voice actors, and hosts who built careers on authenticity? AI has a place in media, no doubt. But it needs to be used openly and responsibly. Listeners deserve to know when they are connecting with a person and when they are not. Otherwise, trust gets harder to win back every time we lose it.

Image Credits: The Sydney Morning Herald

Thanks for reading another week of AI breakthroughs and bold moves. Every week, the future feels a little closer, and we are here for all of it. Whether you are here to stay sharp, stay creative, or just stay curious, we are lucky to have you on this journey with us.

Catch you next Sunday. Until then, keep exploring and building what is possible.

Warm regards,

Kharee