AI Weekly Insights #70

Nvidia's AI Power, Robbie the Robot Dog, and Minecraft Meets Machine Learning

Happy Sunday,

It’s time for ‘AI Weekly Insights’ #70, and this week’s lineup is full of surprises. We’ve got Nvidia dropping desk-friendly AI supercomputers, a robot dog turning museum tours into something out of sci-fi, and OpenAI leveling up how machines talk and listen. Plus, a Minecraft build-off powered by AI might just change how we think about benchmarking creativity.

Ready? Let’s dive in!

The Insights

For the Week of 03/16/25 - 03/22/25:

What’s New: OpenAI has released new models that improve how AI talks and listens. These updates promise more accurate voice responses and better understanding of speech.

Smoother Speech, Smarter Listening: OpenAI's latest models, “gpt-4o-mini-tts” (text-to-speech) and “gpt-4o-transcribe” (speech-to-text), aim to deliver more realistic and adaptable output. The speech model lets developers steer the voice's tone and style, for example making it sound like a "mad scientist" or a "mindfulness teacher". The transcription model effectively replaces OpenAI's older Whisper model and handles accents and hard-to-understand audio better. There's also a smaller model, gpt-4o-mini-transcribe, for faster, lighter-weight use. Unlike Whisper, though, OpenAI isn't releasing these models openly, saying they are much larger and more complex and need more thoughtful deployment. Developers can access them through OpenAI's API, and anyone can try the speech model at openai.fm.

Why It Matters: These updates could change how we talk to AI. Think about calling a customer service line and getting help from a voice that sounds more human and understands you better, even if you have an accent or there's background noise. This kind of progress could make it easier for people to connect with AI. OpenAI's decision not to release the models openly also suggests it is being cautious, trying to avoid the problems that could come from rushing powerful tools out too fast. As AI becomes a bigger part of daily life, from phones to smart homes, these tools could shape how we interact with technology in more personal ways.

Image Credits: OpenAI

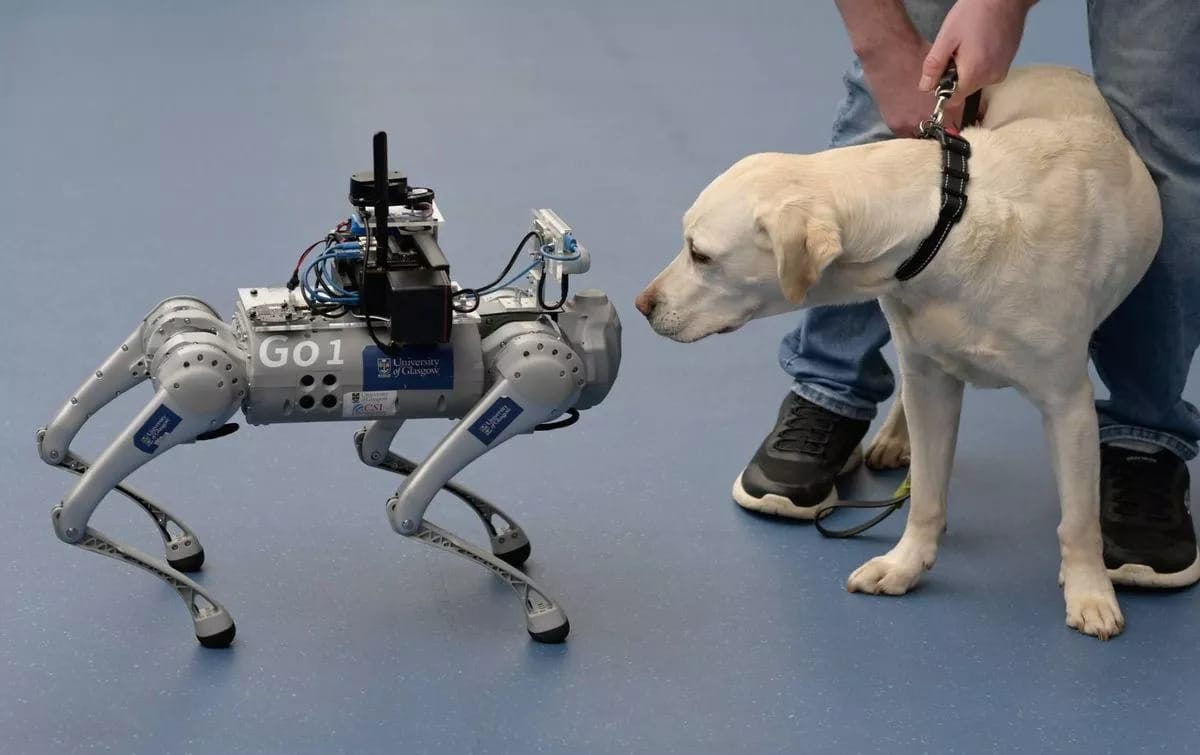

What's New: Researchers in Glasgow have created a robot guide dog named Robbie to help blind and visually impaired people move around public spaces, starting with museums.

A Smarter Kind of Support: Robbie is built on a military-grade robot base, costs around £8,000, and is equipped with a camera, microphones, and a ChatGPT-style language model. It uses mapping tech to understand where it is and talks to users through an earpiece. At Glasgow's Hunterian Museum, Robbie guided visitors through exhibits, answering questions and describing artifacts. The idea is that it could help in other public places too, such as airports, grocery stores, or galleries. It's also being pitched as a stopgap for people stuck on long waitlists for guide dogs.

Why it Matters: This is the kind of innovation that feels both hopeful and practical. Guide dogs can be life-changing, but they're expensive and hard to come by. Robbie isn't here to replace them, but it could fill the gap for people who are waiting or offer support in spaces where regular dogs can't easily go. The fact that it can communicate, understand its environment, and adapt to new places makes it more than just a cool robot: it's assistive tech that's actually useful. The bigger win here is the focus on inclusive design. This isn't a novelty project; it's being built with input from organizations like RNIB Scotland to meet real needs. And if it helps someone feel more independent walking through a museum or catching a flight, that's a big step in the right direction.

Image Credits: Julie Howden / PA

What's New: At GTC 2025, Nvidia introduced two new AI computers, DGX Spark and DGX Station, bringing supercomputer-level performance to individuals and small teams.

AI Power, Now Personal: These new machines are built to help developers and researchers run advanced AI tools without needing massive cloud setups. DGX Spark, powered by Nvidia's GB10 Grace Blackwell Superchip, is open for reservations now and delivers up to 1,000 trillion operations per second of AI compute. DGX Station, which arrives later this year through companies like Dell and HP, offers even more memory and performance. Both are part of Nvidia's effort to make cutting-edge AI tools accessible, not just to big tech companies but to smaller teams and individuals working on everything from new apps to research projects.

Why it Matters: DGX Spark, previously known as Project DIGITS, shows that this isn't just about faster chips: it's about changing who gets to build with AI. Until now, serious AI development often meant huge cloud bills or access to specialized hardware. With DGX Spark and Station, Nvidia is making high-end AI tools something more people can actually own and use locally. That could speed up innovation in fields like medicine, robotics, or even climate modeling. Of course, there are big questions too: how do we manage the energy these systems require, and what kind of safeguards do we need as more people get access to this level of AI power? But overall, this feels like a moment where cutting-edge hardware is stepping out of the data center and into the hands of more creators.

Image Credits: NVIDIA

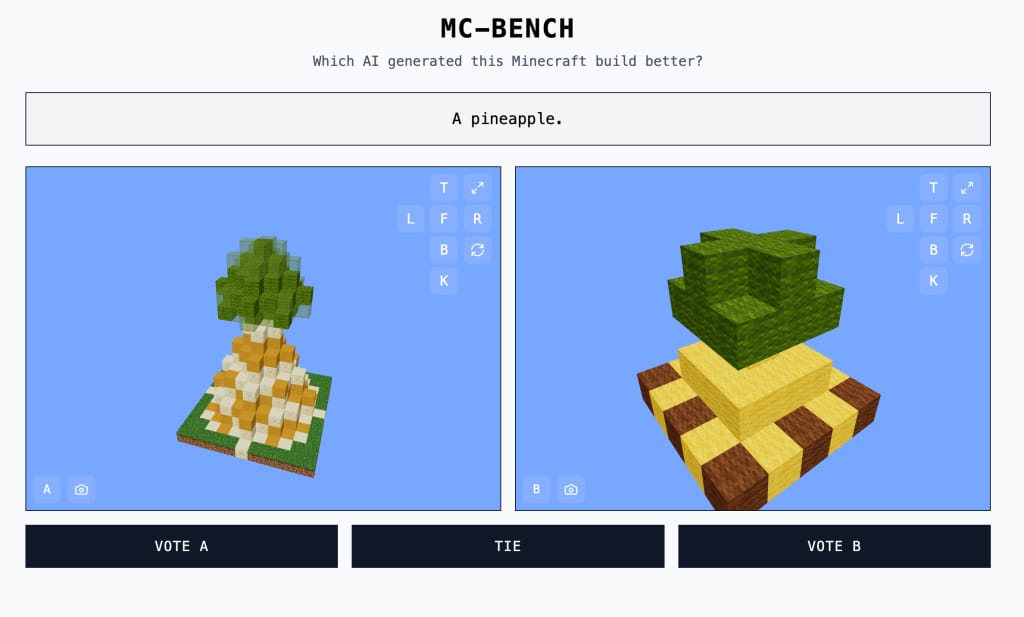

What's New: A high schooler has launched a fun new website that lets people see how different AI models perform in Minecraft and vote on which one built it better.

Minecraft Meets Machine Learning: Adi Singh, a 12th grader, built MC-Bench, a platform that tests how AI models respond to prompts like “build a snowman” or “design a beach hut” by creating structures in Minecraft. Visitors can view the builds side by side and vote for their favorite. While the challenges are simple, they offer a fun, visual way to explore how AI models approach creativity and design. The project is run by volunteers and has drawn support from major AI companies like OpenAI, Google, and Anthropic, though they aren't officially involved.

Why it Matters: This is a clever reminder that evaluating AI doesn’t have to be limited to spreadsheets and academic benchmarks. Games like Minecraft are not only familiar to millions of people, but also give us a relatable way to see what AI is actually doing. MC-Bench invites anyone to explore how AI models interpret language, follow instructions, and make creative decisions. It shows that even simple prompts can reveal a lot about an AI’s strengths and gaps. More importantly, it opens the door to public involvement in how we judge AI progress. That matters in a world where these systems are becoming part of our everyday lives. And the fact that this idea came from a high schooler? It’s a great example of how curiosity, creativity, and accessible tools can come together to shape how we all interact with AI.

Image Credits: Minecraft Benchmark

Thank you for diving into this week’s AI insights! As AI continues to evolve at lightning speed, these conversations matter more than ever. Whether you’re here for the breakthroughs, the debates, or the sheer curiosity of what’s next, your support means a lot.

Let’s keep the discussions going, and as always…

Until next Sunday, stay curious and engaged!

Warm regards,

Kharee