AI Weekly Insights #57

Creative Leaks, Sound Transformations, and AI-First Workflows

Happy Sunday,

Welcome to “AI Weekly Insights” #57! This week, we're diving deep into the creative controversies of OpenAI’s leaked text-to-video tool, exploring NVIDIA’s new generative audio breakthrough, tracking Zoom’s AI-driven transformation, and decoding Anthropic’s universal AI connector.

Ready? Let’s dive in!

The Insights

For the Week of 11/24/24 - 11/30/24 (P.S. Click the story’s title for more information 😊):

What’s New: OpenAI’s text-to-video AI tool, Sora, was leaked by beta testers seeking to highlight alleged exploitation of their creative input and to allow others to test the tool.

Artists Call Out "Art Washing": A group of beta testers made their early access public, letting anyone generate AI videos with the tool for a few hours before OpenAI revoked access. In an open letter, the artists accused OpenAI of exploiting creative labor, claiming they were used as "free bug testers" and "PR puppets." They also criticized approval policies that required OpenAI to vet outputs before they could be shared publicly. OpenAI responded by emphasizing that participation in its program was voluntary and by pointing to its support for artists through grants and other initiatives. Even so, the artists’ accusations of "art washing" have struck a chord in the ongoing debate over fair collaboration in AI development.

Why It Matters: The Sora leak highlights a growing tension between technology companies and creative communities. Artists have long worried about being sidelined or exploited as AI tools get better at mimicking their work. They are essential contributors to these systems, yet many feel undervalued in the process. Critics argue that the way OpenAI, a company valued at $150 billion, engages artists reflects broader problems of transparency and fairness in the AI industry. The controversy raises crucial questions: How should companies compensate the people who help shape their tools? And how can they balance innovation with ethical collaboration? As AI reshapes the creative landscape, companies must prioritize trust and equity or risk alienating the very communities they aim to empower.

What's New: NVIDIA has unveiled Fugatto, a generative AI model that allows users to create and transform music, voices, and sounds using text or audio prompts.

Game-Changer for Creative Audio: Fugatto, short for Foundational Generative Audio Transformer Opus 1, is designed to handle a wide range of audio production tasks in a single model. It can create music from text prompts, change the accent or emotion of a voice, add or remove instruments in an existing track, and generate entirely new soundscapes. Because it was trained on many tasks at once, Fugatto also lets users combine attributes such as tone, accent, and emotion in one output. With 2.5 billion parameters, the model marks a significant step forward for audio AI.

Why It Matters: Fugatto could change the world of audio much as the electric guitar and the sampler changed music in their time. Just as those tools let musicians push creative boundaries, Fugatto lets artists explore new sounds, blend styles, and quickly test and refine ideas that weren't possible before. Video game developers could use it to generate immersive sound effects on the fly, language-learning apps could personalize courses with familiar voices, and advertising agencies could tailor audio to different audiences or regions. As tools like this keep improving, Fugatto offers an exciting look at the future of generative AI in music and audio production.

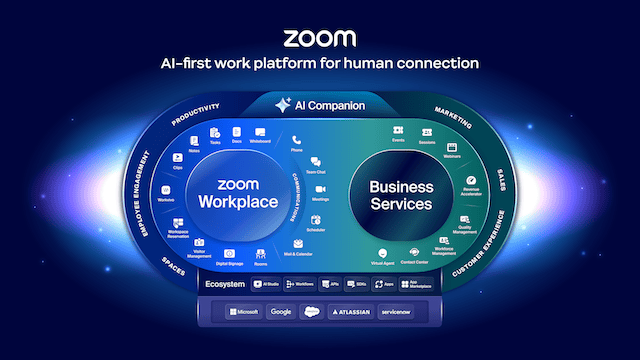

What's New: Zoom has officially dropped “Video” from its corporate name, becoming Zoom Communications Inc., a change that signals a shift from video conferencing to a broader AI-powered productivity platform.

From Meetings to Workflows: Once synonymous with remote work, Zoom is repositioning itself as an “AI-first work platform” to compete with Google and Microsoft in hybrid work. Beyond video calls, its Zoom Workplace suite now includes team chat, office productivity apps, and an AI Companion that automates tasks like summarizing meetings and managing email. CEO Eric Yuan envisions these AI tools growing into customizable digital assistants that could lighten workloads enough to make four-day workweeks possible. The rebrand reflects Zoom’s bid to stay relevant as demand for its classic video product slows.

Why It Matters: Zoom’s rebrand shows how hard it is to turn pandemic-era success into lasting strength in a crowded workplace-software market. By doubling down on AI and expanding its features, Zoom hopes to become a complete workplace solution, part of a broader trend of tech companies using AI to rethink productivity. For users, the AI-first strategy could mean smarter workflows and easier collaboration. Still, Zoom faces an uphill battle against Google Workspace and Microsoft 365, which already have deep ecosystems. Its success will depend on whether its AI features can set it apart and deliver real value to businesses. As Zoom reinvents itself, its journey will test how well mid-sized tech companies can adapt after the pandemic.

Image Credits: Zoom

What's New: Anthropic has open-sourced the Model Context Protocol (MCP), a standard designed to connect AI systems seamlessly to a wide range of data sources.

A Standard for Smarter AI: MCP aims to eliminate the need for custom code to link AI models with each data source, giving developers a single, universal protocol instead. Anthropic says the protocol can work with any AI system, unlike OpenAI’s recent “Work with Apps” feature, which targets specific coding apps. Early adopters like Replit, Codeium, and Sourcegraph are already using MCP to enhance their AI agents, helping them carry out tasks and maintain context across different data sources. By creating a unified standard, Anthropic hopes to replace today’s fragmented integrations with a sustainable framework, making agentic AI systems more practical and scalable.
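To make the idea of "one shared protocol" a little more concrete: MCP messages use the JSON-RPC 2.0 format, so a client can send the same request shapes to any compliant server. The Python sketch below builds two such requests, one asking a server which tools it offers (`tools/list`) and one calling a tool (`tools/call`). It is illustrative only, assuming those method names from the MCP specification: the tool name `search_tickets` and its arguments are hypothetical, and the initialization handshake and the transport layer (such as stdio or HTTP) are left out.

```python
import json
from typing import Optional

# A minimal sketch, assuming MCP's JSON-RPC 2.0 message format.
# The tool name "search_tickets" and its arguments are hypothetical.


def jsonrpc_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request the way an MCP client might send it."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        # Only include "params" when the method actually takes arguments.
        message["params"] = params
    return json.dumps(message)


# 1. Ask the connected server which tools it exposes.
list_tools = jsonrpc_request(1, "tools/list")

# 2. Invoke one of those tools by name, passing structured arguments.
call_tool = jsonrpc_request(
    2,
    "tools/call",
    {"name": "search_tickets", "arguments": {"query": "login bug"}},
)

print(list_tools)
print(call_tool)
```

Because every compliant server understands the same request shapes, a client written once can, in principle, talk to many different data sources, which is exactly the fragmentation MCP is meant to reduce.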

Why It Matters: As AI evolves toward more “agentic” systems, the ability to access and manage many data sources smoothly is becoming crucial. MCP could significantly reduce development complexity, letting companies focus on building smarter AI tools instead of writing a custom connector for every integration. Standardization might also pave the way for a more interconnected AI ecosystem in which systems share context across tools and workflows. For developers and businesses, MCP is a step toward AI systems that are more adaptable and efficient in real-world applications. More broadly, the release reflects an industry shift toward open standards, which let every company build on the same foundation instead of reinventing it. As the ecosystem matures, protocols like MCP could prove vital for helping AI systems handle the complexity of tomorrow’s workflows.

Image Credits: Anthropic

Thank you for being an essential part of this journey through AI’s ever-evolving landscape. I’m always eager to hear your thoughts, questions, or ideas. Together, we can keep pushing the boundaries of what’s possible and inspire each other along the way.

Until next Sunday, keep exploring and stay engaged!

Warm regards,

Kharee