AI Weekly Insights #48

Meta's AR Magic, AI's New Voices, and Rabbit's Bold Comeback

Happy Sunday,

It's the 48th edition of AI Weekly Insights, and we're serving up a big helping of the latest in AI and tech! From OpenAI’s new voice mode that'll have you chatting with your AI like an old friend, to Meta Connect 2024’s exciting tech reveals (spoiler: AR glasses are looking sleek), this issue is packed. Grab your coffee and get ready to explore what’s shaking up the AI world this week!

Ready? Let’s dive in!

The Insights

For the Week of 09/22/24 - 09/28/24 (P.S. Click the story’s title for more information 😊):

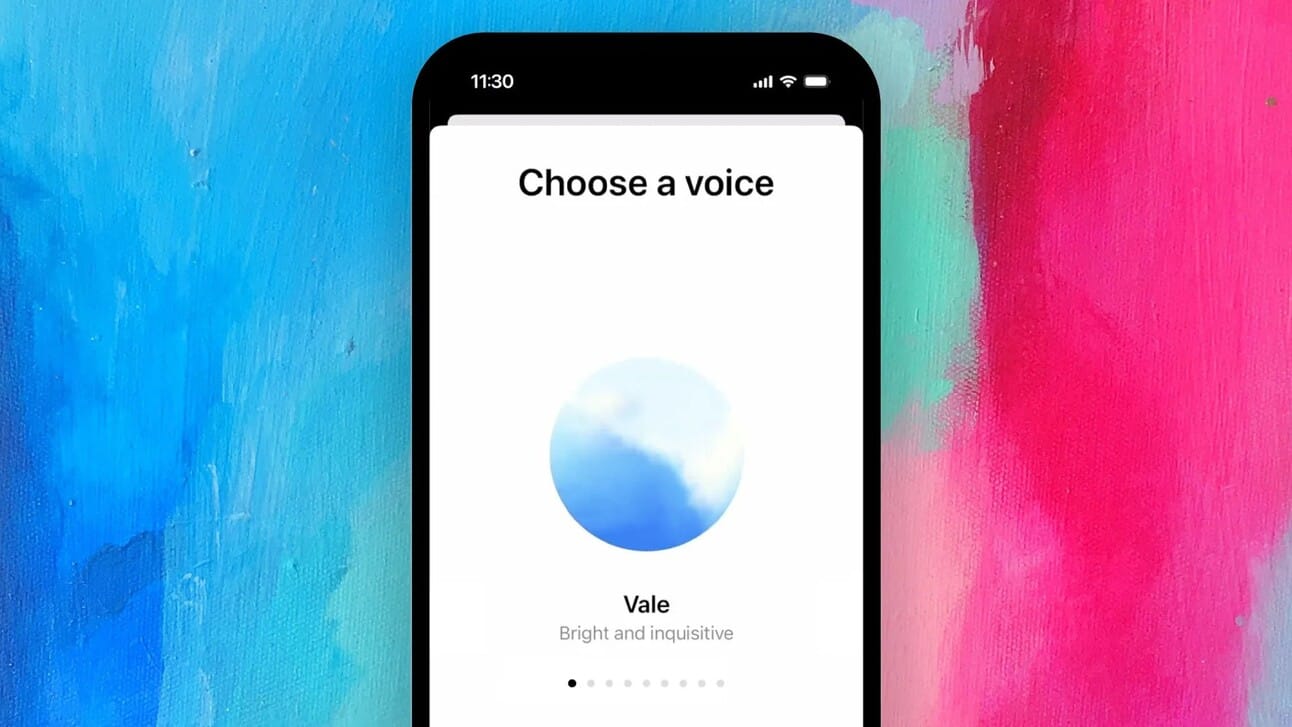

What’s New: OpenAI has launched its Advanced Voice Mode (AVM) for ChatGPT Plus and Team users, bringing a new level of natural conversation to AI interactions.

Smooth Conversations: The Advanced Voice Mode brings nine distinct voice options, including nature-inspired ones like Arbor and Vale, allowing users to choose voices that resonate with them. The feature integrates custom instructions and Memory from ChatGPT's text mode, enabling the AI to remember previous interactions and provide contextually relevant responses. OpenAI also refreshed the interface with a sleek animated blue sphere, adding a modern touch to user interactions.

Why It Matters: After getting hands-on with AVM, I can say it's really a game-changer. I shared it with my family, and everyone was amazed by what it can do. We had the AI embody different characters, run a mini Dungeons and Dragons session, play 20 questions, and even experiment with different languages and accents. It's not just entertaining; it feels like interacting with a smart companion that can adapt. The prospect of upcoming features like video and screen sharing, which OpenAI hinted at in demos back in May, could take this experience to another level. Imagine the possibilities for education, gaming, or even virtual meetings!

While OpenAI has been in the news for other reasons, like the departure of its CTO and discussions about its organizational structure, this development stands out as a notable leap forward in AI capabilities. The technology is evolving rapidly and might soon become an even more integral part of our daily lives.

Image Credits: OpenAI / TechCrunch

What's New: Meta Connect 2024 was a showcase of Meta's continued expansion into AI-driven products and immersive technology. CEO Mark Zuckerberg led the event, unveiling major updates to Meta’s hardware lineup and introducing new AI features.

Key Highlights:

Orion AR Glasses: Meta revealed its Orion augmented reality (AR) glasses, which offer an impressive combination of style and cutting-edge technology. With a sleek design that looks like everyday glasses, Orion uses micro LED projectors to beam images directly in front of your eyes. The glasses feature AI capabilities similar to those of the Ray-Ban smart glasses but add visual elements, like labeling objects in your environment. They also pair with a wireless compute puck and a neural wristband, which detects gestures like pinches.

Meta AI Updates: Meta AI now includes voice interactions, allowing users to engage with the assistant across their various platforms using voices from celebrities such as John Cena, Kristen Bell, and Dame Judi Dench. Meta AI can now also edit photos via text prompts, making it easier to create personalized content. Additionally, Meta’s latest AI model, Llama 3.2, now has multimodal capabilities (it can interpret images, charts, and graphs and respond to questions about visual content).

AI-Generated Content on Social Media: Meta introduced a new feature called "Imagined for You", which brings AI-generated content to your Instagram and Facebook feeds based on your interests. Users can interact with this content to visualize themselves in fantasy scenarios, like as video game characters or astronauts.

Meta Quest 3S: Meta’s new Quest 3S is a more affordable version of the Quest 3, starting at $299.99. It offers many of the same features as the higher-end model, but lacks a depth sensor and has lower resolution screens. This model is aimed at bringing the VR experience to a broader audience by maintaining high performance while keeping costs down. With this launch, Meta is discontinuing both the Quest 2 and Quest Pro.

Ray-Ban Smart Glasses: Meta’s Ray-Ban smart glasses are receiving updates that make them even more practical. New features include real-time language translation, a Reminders feature for everyday tasks, and the ability to handle AI video processing on the go. The glasses will also have new styles, including transparent frames and transition lenses.

Why It Matters: Meta Connect 2024 marked a significant leap not only for AI but also for VR, AR, and MR technology. The Orion AR glasses are particularly noteworthy. Imagine integrating AI features like ChatGPT’s Advanced Voice Mode (mentioned above) or Rabbit’s LAM (mentioned below) into Orion. Meanwhile, Meta AI’s evolution with Llama 3.2 and its emphasis on multimodal interactions position the platform as a formidable alternative to bots like ChatGPT or Gemini. It's clear the company wants to provide top-tier AI-driven experiences. This is particularly evident in its push to integrate AI-generated content directly into users’ social media feeds, something that could reshape how we interact with those platforms. Overall, Meta is strategically positioning itself as a leader in the next wave of consumer tech.

Image Credits: Meta

What's New: Rabbit is gearing up to release a major update for its r1 device on October 1, introducing the Large Action Model (LAM) that promises to transform the device into a versatile AI assistant.

Action-Based Model: The r1 device debuted earlier this year with high hopes, but stumbled due to limited functionality and lackluster integrations. Now, Rabbit is finally shipping its LAM, which enables the r1 to interact with any website. The company's CEO, Jesse Lyu, acknowledged the early missteps and is setting more realistic expectations for the LAM's capabilities. It's still described as a "playground version" that requires some prompt engineering to fully appreciate its benefits.

Why It Matters: The LAM was supposed to be the r1's biggest selling point, and at the time, it seemed almost too good to be true. I think the idea of an AI that can take actions on our behalf represents the next frontier in the space. Imagine a digital assistant that doesn't just answer your questions but actually gets things done for you. Rabbit is also under a lot of pressure to get this right, especially with recent reports indicating only 5,000 daily users (in contrast to the hundreds of thousands of preorders). If the update delivers on its promises, it could reignite interest in the device and possibly redefine expectations for physical AI hardware.

Image Credits: Brian Heater

Image Credits: Rabbit

Your enthusiasm and curiosity drive our exploration through AI's rapidly evolving landscape. I always look forward to your insights, questions, and assistance in spreading these discoveries within the AI community.

Until next Sunday, keep exploring and stay engaged!

Warm regards,

- Kharee