AI Weekly Insights #36

Sustainability Strides, Design Dilemmas, and Iconic Voices

Happy Sunday,

The 36th edition of "AI Weekly Insights" has landed! This week, we're diving into some developments that are sure to catch your eye. Our topics include Google's environmental impact, Figma's AI design tool controversy, a new real-time AI voice assistant, ElevenLabs' iconic voices for digital narration, and a guide to navigating AI scams.

Let’s get into the insights!

The Insights

For the Week of 06/30/24 - 07/06/24:

What’s New: Google has released its Environmental Report for 2024, offering insights into the company's environmental impact, including the resource usage of its AI technology.

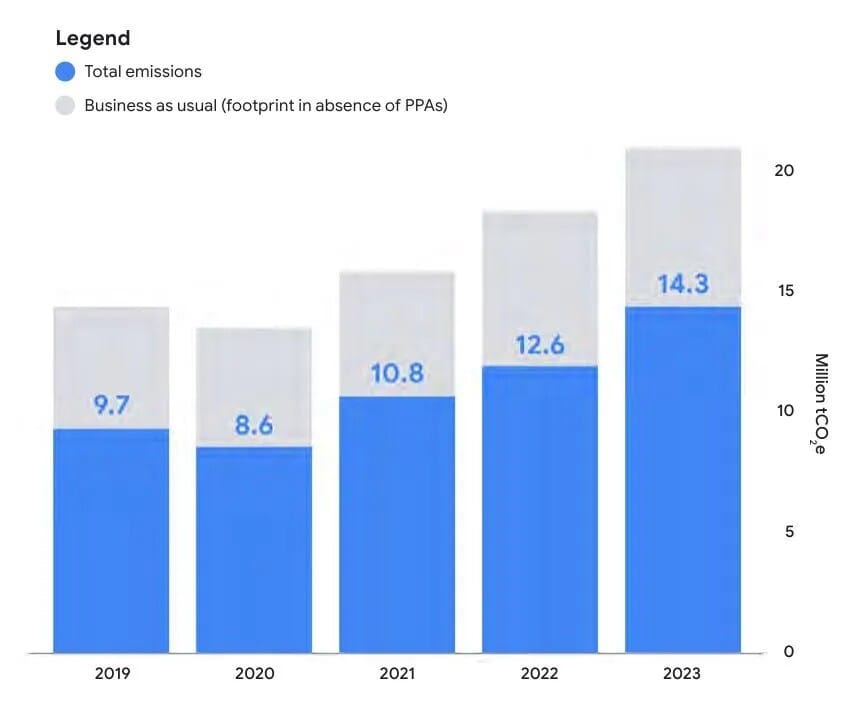

AI for Sustainability: The report provides a comprehensive look at Google's environmental impact across all its products. It highlights how AI is being used to address climate change and manage resource consumption. As demand for AI has grown, so have the energy usage and emissions associated with it.

Why It Matters: Google is making significant strides in applying AI and other technologies to combat climate change, and it's encouraging to see the company commit to positive change and openly share its progress and statistics. However, the report acknowledges that energy consumption has increased steadily year over year as AI resource usage grows. Google aims to achieve net-zero emissions by 2030 but admits there is "uncertainty" about this goal due to the growing demand for AI. While this is concerning, it's important that these issues are being addressed. The future environmental impact of AI is uncertain for many companies, and it needs to remain a priority alongside innovation, privacy, and security. For more details, check out the full report.

Image Credits: Google

What's New: Figma released a new AI tool that helps users quickly create designs from prompts, but pulled it after its output was found to closely copy the design of Apple's Weather app.

Make Designs: The offending designs were shared on X by the CEO of Not Boring Software, who posted a side-by-side comparison of the AI-created design and Apple’s application. Figma's CEO quickly took to X to explain the situation, blaming tight deadlines and the models used for the tool (OpenAI’s GPT-4o and Amazon’s Titan Image Generator G1). Despite this setback, the company remains committed to integrating AI into its platform.

Why It Matters: This incident highlights ongoing concerns about the data used to train AI models. Figma has promised to ensure there is "sufficient variation" before re-releasing the tool. As the company advances its AI efforts, it has discussed training its own models and letting users opt out if they don't want their content used for Figma's training. This approach aims to address ethical concerns and maintain user trust while the company continues to innovate in AI-driven design tools. Below are Apple's Weather app (left) and three designs by the "Make Designs" tool (right).

Image Credits: Andy Allen / X

What's New: French AI developer Kyutai has introduced a real-time voice assistant with a demo comparable to the Advanced Voice Mode shown by OpenAI in May.

Moshi: The assistant, named Moshi, is designed for lifelike conversation and can speak in various accents and emotional styles. It can also listen and speak at the same time. Additionally, it's optimized to run directly on devices without an internet connection, which improves privacy and security.

Why It Matters: The speed of the assistant's responses in the demo is highly impressive, pointing to a future where conversations with AI feel much more natural. Kyutai's decision to open-source the project is a huge win for the AI community: it promotes transparency and lets developers build on the existing framework. There were a few quirks, but I enjoyed my short demo with Moshi.

What's New: AI startup ElevenLabs has launched a new feature on its Reader App, allowing users to listen to digital text narrated by the voices of Hollywood icons like Sir Laurence Olivier, Judy Garland, James Dean, and Burt Reynolds.

Iconic Voices: Through agreements with the estates of these stars, ElevenLabs has added their voices to its library of AI-generated narrators. Users can listen to books, articles, and other digital content narrated by these legendary voices on their phones. Liza Minnelli, Judy Garland's daughter, expressed excitement about the partnership.

Why It Matters: Using AI to recreate the voices of beloved actors opens up exciting possibilities for content consumption. However, it also raises ethical concerns, as AI-generated voices have been misused in the past, for example in political robocalls and unauthorized voice replicas. The actor contract that ended last year's SAG-AFTRA strike includes provisions requiring consent and compensation for the use of digital replicas. ElevenLabs' initiative represents a significant step toward balancing innovation with respect for the legacy and rights of the artists.

AI technology brings impressive advancements, but it also equips scammers with new tools to deceive and exploit. Here’s what to watch out for and how to protect yourself.

Voice Cloning of Family and Friends

Description: AI-generated voices mimic loved ones, often requesting money or help.

Prevention:

Verify calls through known numbers.

Use a family "code word" for emergencies.

Be cautious of unknown numbers.

Personalized Phishing and Spam

Description: AI creates highly personalized phishing emails that appear legitimate.

Prevention:

Avoid clicking unknown links or attachments.

Verify suspicious emails with a second opinion.

Identity and Verification Fraud

Description: AI impersonates individuals to gain access to personal accounts.

Prevention:

Enable multi-factor authentication (MFA).

Monitor and respond to suspicious account activity.

AI-Generated Deepfakes and Blackmail

Description: AI creates fake images or videos for blackmail purposes.

Prevention:

Report incidents to authorities immediately.

Use evolving legal and private resources to combat deepfakes.

General Tips for Staying Safe

Check for Unusual Requests: Be cautious of unsolicited requests for sensitive information.

Verify the Source: Confirm the legitimacy of the sender through official contact information.

Look for Red Flags: Identify subtle grammatical errors or inconsistencies in AI-generated messages.

Use Multi-Factor Authentication (MFA): Add an extra layer of security to your accounts.

Educate Yourself: Stay informed about the latest scam techniques.

Your enthusiasm and curiosity drive our exploration of AI's rapidly evolving landscape. I look forward to your insights, your questions, and your help in sharing our discoveries with the AI community.

Until next Sunday, keep exploring and stay engaged!

Warm regards,

- Kharee