
AI Weekly Insights #18

Google's Gaffes and Reddit's Deals

Happy Sunday!

Welcome to the 18th edition of “AI Weekly Insights,” your roundup of AI insights delivered at your convenience! This week we're diving into Gemini’s image gaffes, Reddit’s groundbreaking deal, and the leap in image generation with Stable Diffusion 3.

The Insights

For the Week of 02/18/24 - 02/24/24:

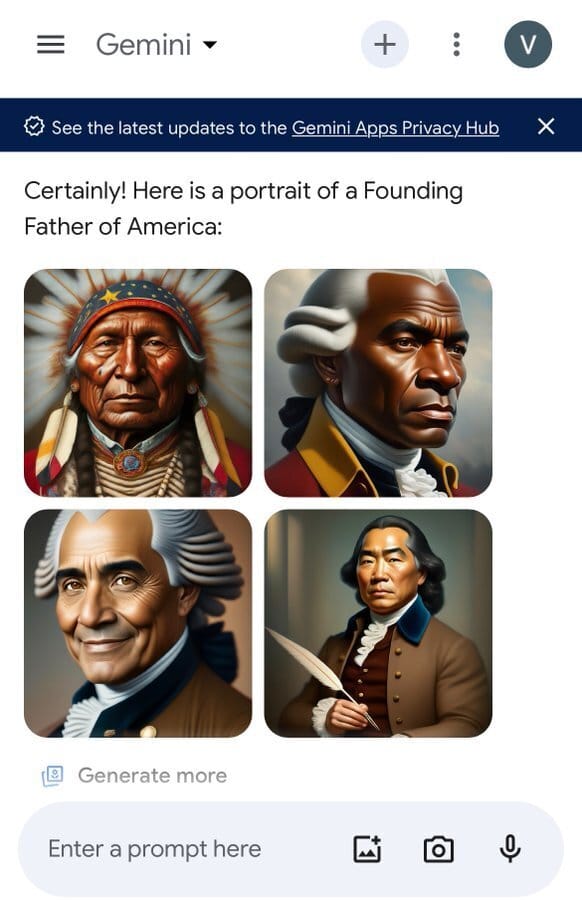

What’s New: Google has paused Gemini’s ability to generate images of people after users flagged historical inaccuracies in its output.

Inaccurate Histories: Earlier this month, Gemini showcased its new image-creation abilities, only for social media users to highlight some glaring inaccuracies, such as depicting the Founding Fathers as people of various ethnicities. Google stood by Gemini's broad image-creation abilities but acknowledged the misstep, temporarily pausing the feature until a refined version is ready for re-release.

Why It Matters: The incident highlights the ongoing challenge of AI bias and the delicate balance between promoting diversity and preserving historical fidelity. Google's approach was likely meant to diversify generic depictions of people, but the backlash underscores the need for accuracy and sensitivity to context when the subject is historical. An AI that adapts its output to the user's prompt is essential, but historically accurate representations should remain the default.

Image Credits: Gemini

What's New: Reddit has struck a major deal with Google, licensing its trove of user-generated content for AI model training.

Reddit Data: According to sources familiar with the matter, the deal is worth about $60 million per year. Reddit's vast discussions and user interactions are a goldmine for Google in search, since people often want answers from others who have been in similar situations. The deal surfaced just as Reddit was preparing for an initial public offering.

Why It Matters: This partnership underscores the immense value of real-world, user-generated content for training more relatable and effective AI models. I have personally appended “Reddit” to my search queries to get better results. Still, this deal could play out in several ways, and it remains to be seen how it turns out.

Image Credits: Reuters

What's New: Stability AI ups the ante with the latest iteration of its acclaimed text-to-image model, Stable Diffusion 3.

Stable Diffusion 3: The new model promises improved performance across a range of scenarios and better spelling, meaning more accurate text rendered within images. It is currently in early preview, accessible through a waitlist, and the company is conducting further safety testing ahead of a wider release.

Why It Matters: In a year when text-to-video generation is all the rage, Stable Diffusion remains a leader in AI-generated imagery. I’ve covered Stability AI’s video generation model in the past, too. As these tools become increasingly adept at creating content nearly indistinguishable from human-made work, the importance of mechanisms for verifying AI-generated content cannot be overstated.

Image Credits: Stability AI

AI History Corner

Natural Language Processing (NLP), a pivotal domain of Artificial Intelligence, has evolved remarkably from its inception, laying the groundwork for many of today's conversational AI systems. Let's embark on a journey through the key milestones in NLP development:

The adventure begins in 1950 with the Turing Test, proposed by Alan Turing. The test challenges a computer to imitate human conversation so well that a person cannot tell they are chatting with a machine. It was an early step toward the dream of intelligent, conversational AI.

In 1966, a leap was made with ELIZA, developed by Joseph Weizenbaum at MIT. ELIZA was a basic chat program that used simple pattern matching to echo your words back in a way that felt somewhat conversational. It was like talking to a parrot with a psychology degree: not very deep, but surprisingly engaging.

The 2010s revolutionized NLP with deep learning and Transformer models such as Google's BERT and OpenAI's GPT series. These technologies ushered in a new era of language understanding and generation, enabling more nuanced, context-aware interactions between humans and machines.

Today, NLP underpins a vast array of applications, reflecting the evolution from Turing's initial concept to complex, meaningful machine interactions. This journey through NLP highlights our relentless pursuit of making technology comprehend and converse in our language, turning science fiction into everyday reality.

Your curiosity fuels this exploration of the ever-evolving AI landscape. Share these insights with fellow AI enthusiasts 🔄. Got any thoughts? Questions? Comment or hit reply. See you next Sunday!

Warm regards,

- Kharee